Introduction

Serverless computing is transforming the way applications are developed, deployed, and managed. With cloud providers like AWS, Azure, and Google Cloud offering powerful serverless architectures, businesses are questioning whether traditional hosting will remain relevant. But is serverless truly the future of cloud computing, or will traditional hosting models continue to play a role?

This article explores the evolution of serverless computing, its advantages and challenges, and whether it signals the end of traditional hosting as we know it. Additionally, we will examine its future potential and how companies can adapt to this emerging paradigm shift.

What is Serverless Computing?

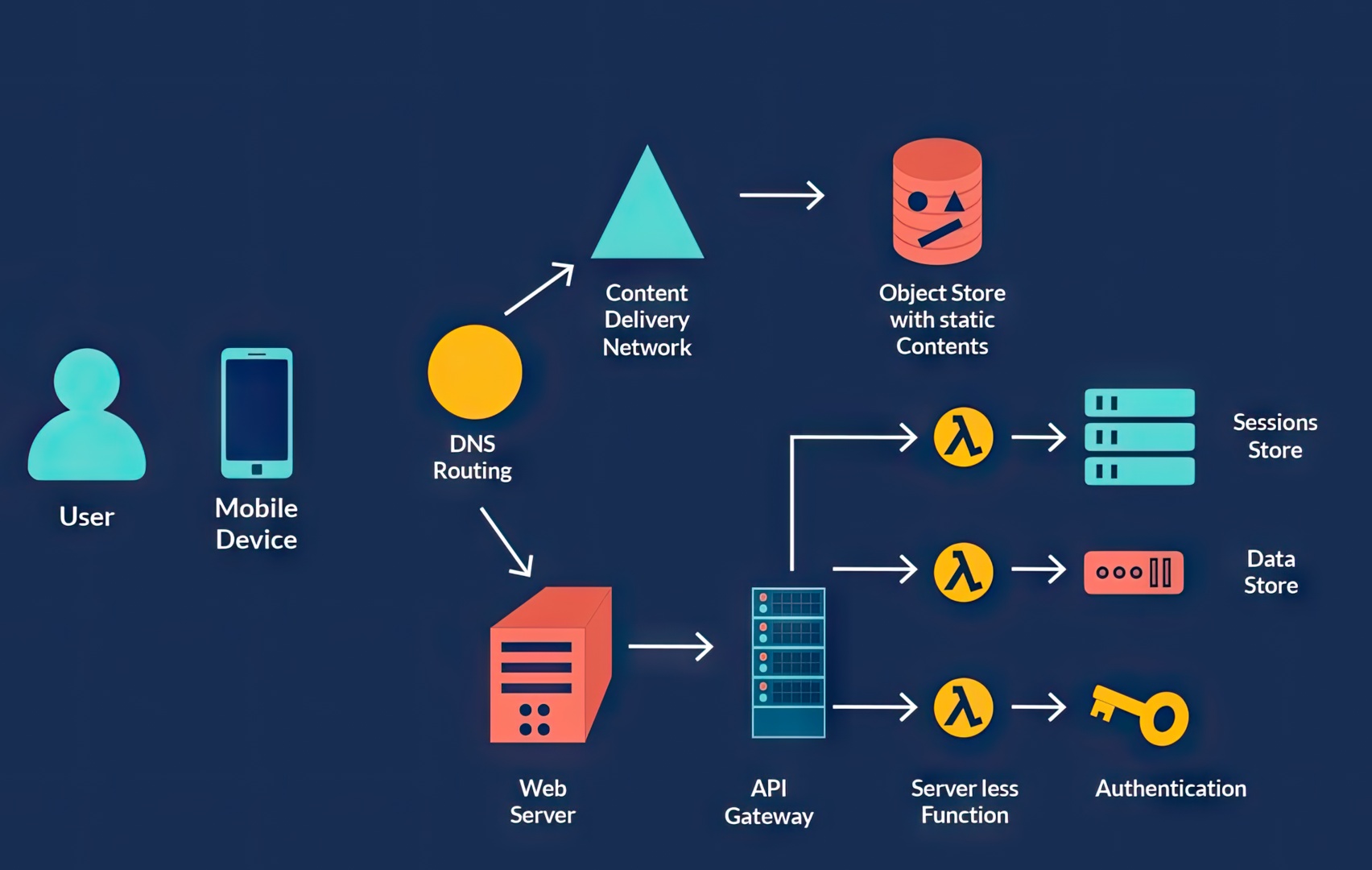

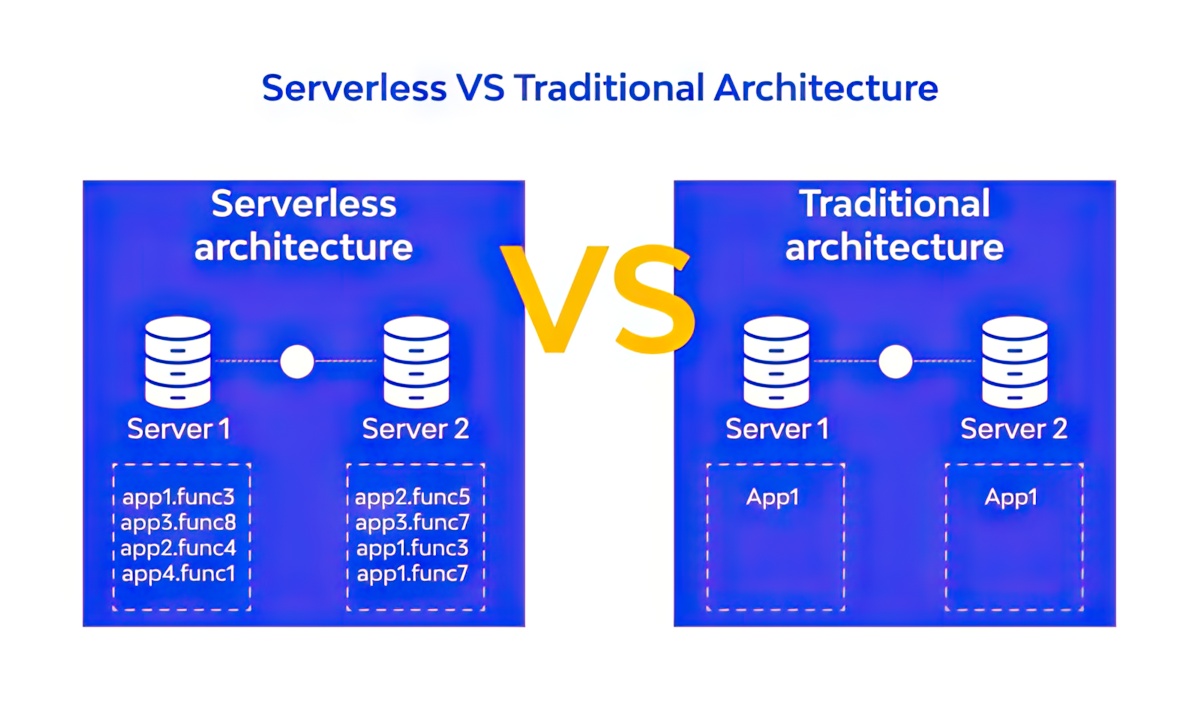

Despite its name, serverless computing doesn’t mean there are no servers. Instead, it refers to a cloud-native architecture where developers can build and run applications without managing the underlying infrastructure. Serverless computing automates server provisioning, scaling, and maintenance, allowing businesses to focus purely on code and business logic.

Key Characteristics of Serverless Computing:

- Automatic Scaling: Applications scale up or down dynamically based on demand.

- Event-Driven Execution: Functions execute in response to specific triggers (e.g., API calls, database changes, or user actions).

- Pay-Per-Use Pricing: Users are billed only for the execution time and resources consumed, rather than for idle capacity.

- No Server Management: Cloud providers handle infrastructure operations, updates, and security.

- High Availability: Serverless functions are replicated across multiple availability zones for maximum uptime.
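To make the event-driven model concrete, here is a minimal sketch of a function written in the AWS Lambda style for Python, where the platform calls `handler(event, context)` once per event. The event shape (`{"name": ...}`) and the greeting logic are assumptions made for illustration; real triggers define their own event fields.

```python
import json


def handler(event, context):
    """Entry point the platform invokes once per event (AWS Lambda-style signature)."""
    # Assumed event shape: {"name": "<string>"}; real triggers define their own fields.
    name = event.get("name", "world")
    return {
        "statusCode": 200,
        "body": json.dumps({"message": f"Hello, {name}!"}),
    }


if __name__ == "__main__":
    # Local invocation with a hand-built event; no server process is started.
    print(handler({"name": "serverless"}, None))
```

Note that the code contains no web server, port binding, or process management. Those concerns belong to the platform, which is the point of the "No Server Management" characteristic above.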

How Serverless Computing is Changing the Game

1. Eliminating Infrastructure Management

Traditional hosting requires businesses to provision, configure, and maintain servers. Serverless computing removes this overhead, allowing developers to focus entirely on application development. This is especially beneficial for startups and agile teams that want to deploy quickly without worrying about server maintenance.

2. Cost Efficiency and Resource Optimization

Unlike traditional hosting models that charge for reserved server capacity, serverless computing operates on a pay-as-you-go model. Organizations pay only for the actual execution of their applications. This can yield significant savings, especially for applications with sporadic traffic, because it avoids over-provisioning and paying for idle computing power.
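The pay-as-you-go arithmetic can be sketched in a few lines. All prices below are hypothetical round numbers chosen for easy math, not quotes from any provider, and the formula (compute billed per GB-second plus a per-request fee) is a simplified assumption about how such billing typically works.

```python
# Hypothetical prices for illustration only; check your provider's pricing page.
PRICE_PER_GB_SECOND = 0.00002
PRICE_PER_MILLION_REQUESTS = 0.20
RESERVED_SERVER_PER_MONTH = 30.00  # assumed flat fee for an always-on server


def serverless_monthly_cost(requests, avg_seconds, memory_gb,
                            price_per_gb_second, price_per_million_requests):
    """Estimate a monthly bill: compute time (GB-seconds) plus a per-request fee."""
    compute = requests * avg_seconds * memory_gb * price_per_gb_second
    request_fee = requests / 1_000_000 * price_per_million_requests
    return compute + request_fee


if __name__ == "__main__":
    for monthly_requests in (1_000_000, 50_000_000):
        cost = serverless_monthly_cost(
            monthly_requests, 0.2, 0.5,
            PRICE_PER_GB_SECOND, PRICE_PER_MILLION_REQUESTS,
        )
        print(f"{monthly_requests:>11,} requests: {cost:.2f} vs reserved {RESERVED_SERVER_PER_MONTH:.2f}")
```

Under these assumed numbers, one million requests a month costs roughly 2.20 against a 30.00 reserved server, while fifty million requests costs roughly 110.00. The savings are real for sporadic traffic, but the comparison can flip for sustained heavy load.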

3. Seamless Scalability

In traditional hosting, businesses must predict their traffic patterns and provision servers accordingly. Serverless architectures, however, scale dynamically without human intervention. Whether an application receives 10 or 10,000 requests per second, the cloud provider automatically adjusts the required resources, within the concurrency quotas it sets for the account. This makes it ideal for applications with unpredictable workloads.
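To get a feel for what "adjusting resources" means at 10 versus 10,000 requests per second, the number of function instances running at once can be estimated with Little's law (concurrency = arrival rate × average duration). The 200 ms duration and the quota of 1,000 below are assumptions for illustration; actual limits vary by provider and account.

```python
import math


def required_concurrency(requests_per_second, avg_duration_ms):
    """Little's law: instances busy at once = arrival rate x average time per request."""
    return math.ceil(requests_per_second * avg_duration_ms / 1000)


def fits_quota(requests_per_second, avg_duration_ms, concurrency_quota):
    """True if the estimated concurrency stays within an assumed account quota."""
    return required_concurrency(requests_per_second, avg_duration_ms) <= concurrency_quota


if __name__ == "__main__":
    ASSUMED_QUOTA = 1000  # hypothetical account limit
    for rps in (10, 10_000):
        needed = required_concurrency(rps, 200)
        print(rps, "req/s ->", needed, "concurrent instances,",
              "within quota" if fits_quota(rps, 200, ASSUMED_QUOTA) else "exceeds quota")
```

With these assumptions, 10 requests per second needs about 2 concurrent instances and 10,000 needs about 2,000. The platform handles the scaling itself, but it is worth checking the estimate against your quota before a traffic spike does it for you.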

4. Faster Deployment and Innovation

With no need to manage infrastructure, developers can release new features and updates faster than ever before. Serverless computing enables rapid iteration, making it an ideal solution for microservices-based architectures and applications that require frequent updates. Businesses can innovate faster by reducing deployment complexity and focusing on application functionality.

5. Built-in Security and Compliance

With traditional hosting, security management requires manual updates, patching, and configurations. Serverless providers take on much of the infrastructure security, including patching the underlying hosts and runtimes, offering encryption, and maintaining platform-level compliance certifications. However, companies must still implement application-level security practices to protect sensitive data.

Challenges of Serverless Computing

Despite its advantages, serverless computing is not without its challenges. Here are some potential drawbacks businesses need to consider:

1. Cold Starts and Latency Issues

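When a serverless function is invoked after a period of inactivity, or when the platform starts a new instance to absorb extra load, it experiences a cold start: the runtime and the function's code must be initialized before the request is handled, which increases latency. For applications requiring low-latency responses, such as real-time gaming or financial transactions, cold starts can pose a significant challenge.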

2. Vendor Lock-In

Serverless computing relies heavily on cloud providers. Applications built using AWS Lambda, for instance, may require significant refactoring to migrate to another provider, creating dependency risks. To reduce vendor lock-in, businesses can adopt multi-cloud strategies and use open-source serverless frameworks like OpenFaaS or Knative.
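At the code level, one way to limit the refactoring a migration would require is to keep business logic free of provider-specific types and wrap it in thin adapters. The sketch below illustrates the idea. `create_order` and its fields are hypothetical, `lambda_adapter` assumes an event that carries a JSON string under `"body"`, and `generic_http_adapter` is a made-up stand-in for some other platform's entry point.

```python
import json


def create_order(payload):
    """Provider-neutral business logic: plain dict in, plain dict out."""
    quantity = int(payload["quantity"])
    if quantity <= 0:
        raise ValueError("quantity must be positive")
    return {"item": payload["item"], "quantity": quantity, "status": "accepted"}


def lambda_adapter(event, context):
    """Thin AWS Lambda-style wrapper; assumes a JSON string in event["body"]."""
    try:
        result = create_order(json.loads(event["body"]))
        return {"statusCode": 200, "body": json.dumps(result)}
    except (KeyError, ValueError) as exc:
        return {"statusCode": 400, "body": json.dumps({"error": str(exc)})}


def generic_http_adapter(raw_body):
    """Hypothetical wrapper for a platform that hands over the raw request body."""
    try:
        return 200, json.dumps(create_order(json.loads(raw_body)))
    except (KeyError, ValueError) as exc:
        return 400, json.dumps({"error": str(exc)})
```

Only the adapters know about a provider's event and response formats, so moving platforms means rewriting a few lines of glue rather than the core logic. Managed services the function depends on (queues, databases, identity) are a separate source of lock-in that this pattern does not address.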

3. Limited Execution Time

Many serverless functions have execution time limits (e.g., AWS Lambda has a maximum duration of 15 minutes). This makes a single function invocation a poor fit for long-running processes such as large batch jobs, model training, or high-performance computing tasks, unless the work can be split into smaller steps.
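When work can be split, a function can process items until it nears its time limit and then return a checkpoint so a later invocation picks up where it left off. The sketch below assumes an AWS Lambda-style context object, whose `get_remaining_time_in_millis()` method reports the time left. The event fields (`items`, `next_index`), the 10-second safety margin, and the `FakeContext` test double are assumptions for illustration, and how the follow-up invocation gets triggered (a queue, a workflow service) is left out.

```python
SAFETY_MARGIN_MS = 10_000  # assumed buffer; stop well before the hard limit


def process_item(item):
    """Placeholder for the real unit of work."""
    return item * 2


def handler(event, context):
    """Process items until time runs low, then report where to resume."""
    items = event["items"]
    index = event.get("next_index", 0)
    results = []
    while index < len(items):
        if context.get_remaining_time_in_millis() < SAFETY_MARGIN_MS:
            break  # not enough time left; hand back a checkpoint instead
        results.append(process_item(items[index]))
        index += 1
    return {"results": results, "next_index": index, "done": index >= len(items)}


class FakeContext:
    """Local stand-in for the runtime context; reports ample time for a fixed number of checks."""

    def __init__(self, checks_before_timeout):
        self._checks_left = checks_before_timeout

    def get_remaining_time_in_millis(self):
        self._checks_left -= 1
        return 60_000 if self._checks_left >= 0 else 0
```

A caller would re-invoke the function with the returned `next_index` until `done` is true. For jobs that cannot be chunked this way, a container or traditional server is usually the simpler choice.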

4. Security and Compliance Risks

Traditional hosting gives businesses greater control over security policies. In serverless computing, security is largely managed by cloud providers, raising concerns about data privacy, compliance, and multi-tenancy risks. Organizations must carefully assess cloud provider compliance certifications and implement zero-trust security models.

Will Serverless Replace Traditional Hosting?

While serverless computing is gaining traction, it is unlikely to completely replace traditional hosting in the near future. Instead, we may see a hybrid model where serverless and traditional hosting coexist based on use cases.

When to Use Serverless:

- Applications with inconsistent traffic that benefit from dynamic scaling.

- Event-driven workflows (e.g., IoT applications, API backends, and background tasks).

- Microservices-based architectures where function-as-a-service (FaaS) enables modular development.

- Startups and businesses looking to minimize infrastructure management costs.

When Traditional Hosting Still Makes Sense:

- Applications requiring consistent performance with minimal latency.

- Workloads with long-running processes or high memory consumption.

- Enterprises needing greater control over infrastructure security and compliance.

- Legacy applications that may not be easily migrated to a serverless architecture.

The Future of Serverless Computing

The adoption of serverless computing is accelerating, and future innovations will likely address existing limitations while making serverless even more appealing.

Key Trends to Watch:

- Edge Computing and Serverless Integration: Processing closer to users will reduce latency and improve performance, making serverless viable for real-time applications.

- AI-Powered Serverless Platforms: Smarter workload optimization will make serverless computing even more efficient by auto-adjusting resources based on machine learning insights.

- Multi-Cloud Serverless Solutions: Avoiding vendor lock-in with cross-cloud compatibility will become a priority for enterprises.

- Improved Security Models: Enhanced serverless security frameworks will mitigate compliance risks and protect against data breaches.

- Event-Driven Data Pipelines: More businesses will shift toward event-driven architectures to process large-scale data streams efficiently.

Conclusion

Serverless computing is not the end of traditional hosting, but it is reshaping the way applications are built and deployed. While traditional hosting will remain relevant for specific use cases, serverless will continue to gain traction, especially for businesses prioritizing scalability, cost efficiency, and agility.

For companies looking to future-proof their infrastructure, a hybrid approach—leveraging the strengths of both traditional hosting and serverless computing—may be the most strategic option. Organizations that adopt serverless-first strategies while maintaining flexibility for traditional hosting will be best positioned for the evolving cloud landscape.

🚀 Is your business ready for the serverless revolution? Let us know your thoughts in the comments below!