In the rapidly evolving landscape of artificial intelligence, multimodal machine learning stands at the forefront of innovation, promising to revolutionize how machines perceive and interact with the world. Unlike traditional AI systems that process single types of data, multimodal AI can simultaneously understand and integrate multiple forms of information—text, images, audio, and video—creating more powerful and human-like intelligence systems that are reshaping industries and opening new frontiers for technological advancement.

Understanding the Power of Multiple Modalities

Humans naturally process the world through multiple senses. We see, hear, read, and feel our environment simultaneously, integrating these diverse inputs to form a comprehensive understanding of our surroundings. Multimodal machine learning aims to replicate this natural ability by training AI systems to process and find relationships between different types of data.

Traditional AI systems have been limited to processing single modalities—computer vision models analyze images, natural language processing models interpret text, and speech recognition systems process audio. While these unimodal approaches have achieved remarkable success in their respective domains, they fail to capture the rich, interconnected nature of real-world information.

Multimodal AI transcends these limitations by combining multiple data streams, enabling systems to develop a more holistic understanding of complex scenarios. For instance, when analyzing a video conference, a multimodal system can simultaneously process the spoken words, facial expressions, gestures, and textual information, gaining insights that would be impossible through any single modality alone.

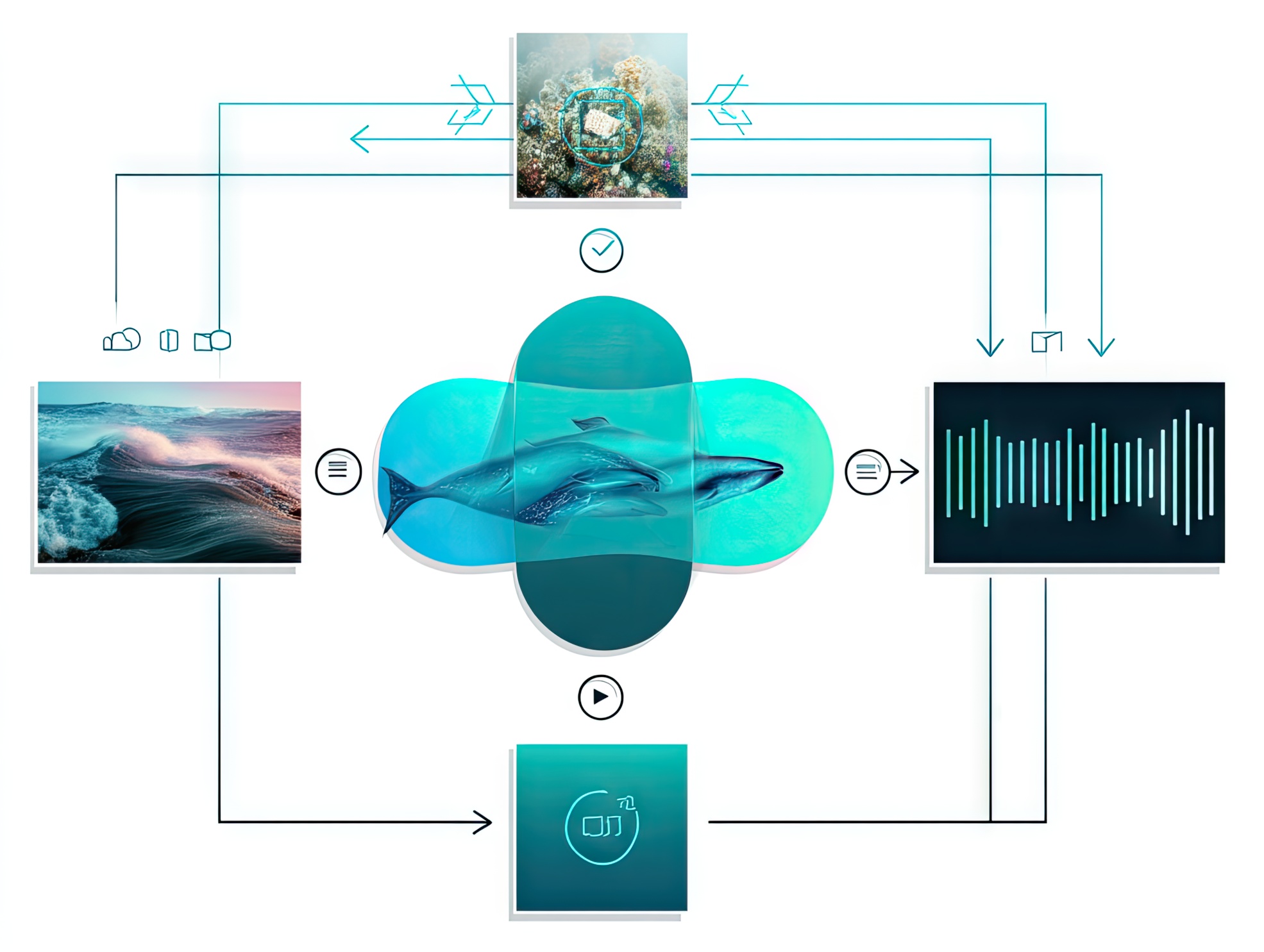

The architecture of multimodal systems typically involves specialized neural networks for each modality, followed by sophisticated fusion mechanisms that integrate these diverse representations. This integration can occur at various levels:

- Early Fusion: Raw inputs or low-level features from different modalities are combined near the start of the pipeline, typically by concatenation, so that a single model learns a joint representation from all modalities at once.

- Late Fusion: Each modality is processed independently, and the results are combined only at the decision-making stage.

- Hybrid Fusion: A combination of early and late fusion approaches, allowing for more flexible integration of multimodal information.
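
The difference between these strategies can be sketched in plain Python. This minimal example assumes each modality has already been reduced to a small feature vector by its own encoder and that the task is binary classification. The weights, feature values, and function names are illustrative rather than taken from any particular system, and the hybrid variant shown is only one simple way to mix the two approaches.

```python
import math


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def linear(weights: list[float], bias: float, x: list[float]) -> float:
    # Weighted sum of the inputs plus a bias term
    return sum(w * xi for w, xi in zip(weights, x)) + bias


def early_fusion(text_feat, image_feat, weights, bias):
    # Concatenate the modality features first, so one classifier sees both at once
    joint = text_feat + image_feat
    return sigmoid(linear(weights, bias, joint))


def late_fusion(text_feat, image_feat, text_model, image_model, text_weight=0.5):
    # Each modality gets its own (weights, bias) classifier; only the
    # output probabilities are combined, as a weighted average
    p_text = sigmoid(linear(*text_model, text_feat))
    p_image = sigmoid(linear(*image_model, image_feat))
    return text_weight * p_text + (1.0 - text_weight) * p_image


def hybrid_fusion(text_feat, image_feat, joint_model, text_model, image_model, mix=0.5):
    # One simple hybrid (an illustrative assumption, not a standard recipe):
    # blend the early-fused prediction with the late-fused one
    p_early = early_fusion(text_feat, image_feat, *joint_model)
    p_late = late_fusion(text_feat, image_feat, text_model, image_model)
    return mix * p_early + (1.0 - mix) * p_late


text_feat, image_feat = [0.2, 0.7], [0.9, 0.1]
print(early_fusion(text_feat, image_feat, [0.5, -0.3, 1.2, 0.4], 0.1))
print(late_fusion(text_feat, image_feat, ([0.5, -0.3], 0.0), ([1.2, 0.4], 0.1)))
```

If a deeper network replaced the single linear layer, the early-fusion model could learn interactions between modalities, which late fusion cannot. Late fusion, in turn, copes more gracefully with a missing modality, since that modality's classifier can simply be dropped from the average.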

The challenge lies in aligning these different data types—ensuring that related concepts across modalities (like the word “cat,” an image of a cat, and the sound of a meow) are represented similarly in the model’s internal space. Recent advances in representation learning and cross-modal alignment have made significant strides in addressing these challenges, paving the way for more sophisticated multimodal applications.

Core Technical Challenges in Multimodal Learning

Developing effective multimodal AI systems involves overcoming several fundamental challenges:

Representation Learning

Creating unified representations that can capture the unique characteristics of each modality while facilitating meaningful cross-modal interactions is a central challenge. Researchers have developed various approaches to multimodal representation learning:

- Joint representations combine the features of all modalities into a single representation in a shared high-dimensional space, for example by concatenating the outputs of per-modality encoders and passing them through further layers.

- Coordinated representations encode modalities separately but coordinate them through constraints, ensuring that related concepts across modalities are represented similarly.
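
The two families can be contrasted in a few lines of plain Python. This sketch assumes pre-extracted feature vectors and uses hand-picked projection matrices in place of learned weights. The names `joint_representation`, `coordinated_representations`, and `coordination_loss` are hypothetical, and a cosine-similarity penalty stands in for the many kinds of constraints used in practice.

```python
import math


def matvec(matrix, x):
    # Multiply a matrix (given as a list of rows) by a vector
    return [sum(m * xi for m, xi in zip(row, x)) for row in matrix]


def cosine(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def joint_representation(text_feat, image_feat, w_joint):
    # Joint: one vector computed from the concatenated modality features
    return [math.tanh(v) for v in matvec(w_joint, text_feat + image_feat)]


def coordinated_representations(text_feat, image_feat, w_text, w_image):
    # Coordinated: separate projections, each landing in a space of the same size
    return matvec(w_text, text_feat), matvec(w_image, image_feat)


def coordination_loss(text_emb, image_emb):
    # Constraint for a matching pair: push cosine similarity toward 1
    return 1.0 - cosine(text_emb, image_emb)


text_feat, image_feat = [1.0, 0.0, 2.0], [0.5, 0.5]
w_text = [[1.0, 0.0, 0.0], [0.0, 0.0, 1.0]]  # 3-d text features -> 2-d shared space
w_image = [[2.0, 0.0], [0.0, 4.0]]           # 2-d image features -> 2-d shared space
t, i = coordinated_representations(text_feat, image_feat, w_text, w_image)
print(coordination_loss(t, i))
```

Here the two projections happen to map the pair onto the same direction, so the coordination loss is essentially zero; during training, the projection weights would be adjusted to make this true for related pairs in general.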

Multimodal Fusion

Effectively combining information from different modalities is perhaps the most critical aspect of multimodal learning. Beyond the early, late, and hybrid fusion approaches mentioned earlier, researchers are exploring attention-based mechanisms that dynamically weight the importance of different modalities based on context. Transformer architectures, which have revolutionized natural language processing, are now being adapted for multimodal fusion, typically through cross-attention, in which tokens from one modality (such as words) attend to tokens from another (such as image patches).
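
A single cross-attention step can be sketched in plain Python as scaled dot-product attention: each query's scores against the other modality's keys are divided by the square root of the key dimension, passed through a softmax, and used to average the corresponding values. This is a simplified sketch. It assumes the text-token queries and image-patch keys and values are already in a common dimension, and it omits the learned query/key/value projection matrices, multiple heads, and residual connections that real multimodal transformers use.

```python
import math


def softmax(scores):
    # Subtract the max for numerical stability before exponentiating
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def cross_attention(queries, keys, values):
    """Each query (e.g. a text token) attends over the keys/values of another
    modality (e.g. image patches) and returns a weighted mix of those values."""
    d = len(keys[0])
    outputs, attention = [], []
    for q in queries:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        weights = softmax(scores)
        mixed = [sum(w * v[j] for w, v in zip(weights, values)) for j in range(len(values[0]))]
        outputs.append(mixed)
        attention.append(weights)
    return outputs, attention


# Toy example: one "text token" query and two "image patch" tokens
text_tokens = [[10.0, 0.0]]
image_patches = [[1.0, 0.0], [0.0, 1.0]]
fused, weights = cross_attention(text_tokens, image_patches, image_patches)
print(weights)
```

The attention weights are the context-dependent weighting described above: this query closely matches the first patch and puts nearly all of its weight there, while a different query would shift the weight elsewhere without any change to the code.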

Alignment

Identifying direct relationships between elements in different modalities, such as matching a sentence to the corresponding segment of a video or aligning audio with visual lip movements, remains a significant challenge. Contrastive learning, in which models are trained to distinguish between related and unrelated pairs of multimodal data, has shown promising results here; OpenAI's CLIP, for example, learns a shared image-text space by training images and their captions to match.
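
A common form of this objective is the symmetric contrastive (InfoNCE-style) loss popularized by CLIP. The sketch below is a plain-Python illustration. It assumes a batch of paired image and text embeddings produced by some upstream encoders and uses a fixed temperature of 0.07 (CLIP learns this value during training). The function names are illustrative.

```python
import math


def normalize(v):
    n = math.sqrt(sum(x * x for x in v))
    return [x / n for x in v]


def log_softmax_at(logits, index):
    # Numerically stable log(softmax(logits)[index])
    m = max(logits)
    log_sum = m + math.log(sum(math.exp(l - m) for l in logits))
    return logits[index] - log_sum


def clip_style_contrastive_loss(image_embs, text_embs, temperature=0.07):
    imgs = [normalize(v) for v in image_embs]
    txts = [normalize(v) for v in text_embs]
    n = len(imgs)
    # logits[i][j]: scaled cosine similarity of image i and text j; pair (i, i) is the true match
    logits = [[sum(a * b for a, b in zip(imgs[i], txts[j])) / temperature for j in range(n)]
              for i in range(n)]
    # Image -> text: each row must pick out its own caption
    loss_i2t = -sum(log_softmax_at(logits[i], i) for i in range(n)) / n
    # Text -> image: each column must pick out its own image
    loss_t2i = -sum(log_softmax_at([logits[i][j] for i in range(n)], j) for j in range(n)) / n
    return (loss_i2t + loss_t2i) / 2


aligned = clip_style_contrastive_loss([[1.0, 0.0], [0.0, 1.0]], [[1.0, 0.0], [0.0, 1.0]])
swapped = clip_style_contrastive_loss([[1.0, 0.0], [0.0, 1.0]], [[0.0, 1.0], [1.0, 0.0]])
print(aligned, swapped)
```

Each row and column of `logits` is a small classification problem over the batch, and the other pairs in the batch serve as the unrelated examples. Correctly matched embeddings give a loss near zero, while mismatched ones are heavily penalized.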

Translation

Converting information from one modality to another, such as generating captions for images or generating images from textual descriptions, requires a detailed understanding of both the source and target modalities. Generative models like GANs (Generative Adversarial Networks) and diffusion models have enabled remarkable progress in cross-modal translation tasks.

Transforming Industries Through Integration

The applications of multimodal AI are already transforming key industries, creating new possibilities and enhancing existing processes:

Healthcare Revolution

In healthcare, multimodal systems are changing how diseases are diagnosed and treated. By analyzing patient records alongside medical images, genomic data, and sensor readings, these systems can identify patterns and correlations that might escape even experienced clinicians.

- Diagnostic Accuracy: Multimodal systems that combine medical imaging with patient history and laboratory results have been reported to diagnose conditions ranging from cancer to neurological disorders more accurately than models that rely on a single data source. Companies such as Cohere Health are building platforms that integrate diverse clinical data sources to inform clinical decisions.

- Remote Monitoring: Wearable devices that track multiple physiological parameters can feed data to multimodal AI systems that detect subtle changes indicating potential health issues, enabling earlier intervention and personalized care.

- Drug Discovery: By analyzing molecular structures, genetic data, and clinical trial results simultaneously, multimodal AI is accelerating the identification of promising drug candidates and predicting their efficacy and safety profiles.

Reinventing Customer Experiences

Customer service and retail experiences have been revolutionized through multimodal AI systems that can simultaneously process text queries, voice intonation, facial expressions, and behavioral data to provide more personalized and responsive service.

- Omnichannel Support: Modern customer service platforms integrate conversations across text, voice, and video channels, maintaining context and continuity regardless of how customers choose to communicate.

- Emotion Recognition: By analyzing facial expressions, voice tone, and language choice, multimodal systems can detect customer emotions and adapt responses accordingly, creating more empathetic interactions.

- Personalized Shopping: In retail, multimodal AI combines visual recognition of products, analysis of shopping behavior, and processing of customer preferences to create highly personalized recommendations and experiences.

Manufacturing and Supply Chain Optimization

Manufacturing benefits from multimodal AI’s ability to analyze visual inspection data alongside performance metrics and sensor readings, enhancing quality control and predictive maintenance capabilities.

- Quality Assurance: Visual inspection systems combined with acoustic analysis and performance data can detect defects and anomalies with greater accuracy than any single-modality approach.

- Predictive Maintenance: By monitoring equipment through multiple sensors and analyzing the resulting data streams together, multimodal AI can predict failures before they occur, reducing downtime and maintenance costs.

- Supply Chain Visibility: Integrating data from IoT devices, transportation systems, inventory management, and demand forecasting enables more resilient and responsive supply chains.
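
The benefit of analyzing sensor streams together can be shown with a small sketch. It assumes three hypothetical sensors whose healthy readings are independent and roughly normally distributed with known means and standard deviations. Under that assumption, the sum of squared z-scores follows a chi-square distribution with three degrees of freedom, whose 99th percentile is about 11.345. The sensor names, baselines, and readings are invented for illustration.

```python
# Healthy-operation baselines: (mean, standard deviation) per sensor (illustrative values)
BASELINES = {
    "vibration_mm_s": (4.0, 0.5),
    "bearing_temp_c": (60.0, 2.0),
    "acoustic_db": (70.0, 3.0),
}
PER_SENSOR_Z = 2.576      # two-sided 99% cutoff for a single standard normal reading
COMBINED_CUTOFF = 11.345  # 99th percentile of chi-square with 3 degrees of freedom (valid for 3 sensors only)


def z_scores(readings, baselines):
    # How many standard deviations each reading sits from its healthy mean
    return {name: (readings[name] - mean) / std for name, (mean, std) in baselines.items()}


def assess(readings, baselines=BASELINES):
    z = z_scores(readings, baselines)
    any_single_alarm = any(abs(v) > PER_SENSOR_Z for v in z.values())
    combined = sum(v * v for v in z.values())
    return {
        "single_sensor_alarm": any_single_alarm,
        "combined_score": combined,
        "combined_alarm": combined > COMBINED_CUTOFF,
    }


reading = {"vibration_mm_s": 5.1, "bearing_temp_c": 64.2, "acoustic_db": 76.0}
print(assess(reading))
```

Each sensor is only about two standard deviations off, so none trips its own alarm. Three such deviations occurring together are much less likely under normal operation, however, so the combined score exceeds its cutoff. Real sensor streams are usually correlated, in which case a Mahalanobis distance or a learned model would replace this simple sum.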

Content Creation and Media

The creative industries are being transformed by multimodal AI systems that can generate and manipulate content across different media types:

- Automatic Video Creation: Systems that can understand text prompts and generate corresponding video content are revolutionizing content creation for marketing, education, and entertainment.

- Cross-Modal Editing: Tools that allow editing of one modality (such as text) to automatically update related content in another modality (such as images or video) are streamlining creative workflows.

- Content Personalization: Media companies are using multimodal AI to analyze viewer preferences across different content types and formats, delivering highly personalized recommendations and experiences.

Market Growth and Future Trajectory

The economic impact of multimodal AI is substantial and growing rapidly. According to one recent market research estimate, the global multimodal AI market was valued at $1.6 billion in 2024 and is projected to grow at a compound annual growth rate (CAGR) of 32.7% through 2034, reaching approximately $27 billion by the end of the forecast period.
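
These figures can be sanity-checked with the standard compound-growth formula, value = start × (1 + CAGR)^years. The sketch assumes the 32.7% rate compounds annually over the ten years from the 2024 base to 2034.

```python
def project(start_value: float, cagr: float, years: int) -> float:
    # Compound growth: multiply by (1 + rate) once per year
    return start_value * (1.0 + cagr) ** years


def implied_cagr(start_value: float, end_value: float, years: int) -> float:
    # Invert the formula to recover the annual rate from two endpoints
    return (end_value / start_value) ** (1.0 / years) - 1.0


projected_2034 = project(1.6, 0.327, 10)  # in billions of US dollars
rate_from_endpoints = implied_cagr(1.6, 27.0, 10)
print(f"Projected 2034 market: ${projected_2034:.1f}B")
print(f"Implied CAGR for $1.6B -> $27B: {rate_from_endpoints:.1%}")
```

The two directions agree: compounding $1.6 billion at 32.7% for ten years gives roughly $27 billion, and the endpoints imply a rate of about 32.7%, so the quoted rate and market size are mutually consistent.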

This remarkable growth is driven by several factors:

Increasing R&D Investments

Major tech companies are investing in AI at an unprecedented scale. Meta, Amazon, Alphabet, and Microsoft plan combined capital expenditures of up to $320 billion in 2025, much of it on AI infrastructure, a significant increase from $230 billion in 2024. These figures cover AI broadly rather than multimodal AI specifically, but the data centers and compute they fund are what large multimodal models are trained and served on.

Expanding Application Landscape

As multimodal AI matures, its applications are expanding beyond traditional domains like healthcare and retail into new areas such as:

- Environmental Monitoring: Combining satellite imagery, sensor data, and climate models to track environmental changes and predict natural disasters.

- Smart Cities: Integrating traffic cameras, noise sensors, air quality monitors, and citizen feedback to optimize urban infrastructure and services.

- Education: Creating personalized learning experiences that adapt to students’ learning styles by analyzing their interactions across different educational media and formats.

Technological Convergence

Multimodal AI is increasingly converging with other emerging technologies, creating powerful new capabilities:

- IoT Integration: The proliferation of Internet of Things (IoT) devices is generating vast amounts of multimodal data that can be leveraged for applications ranging from smart homes to industrial automation.

- Edge Computing: Advances in edge computing are enabling multimodal AI systems to process data locally, reducing latency and enhancing privacy for applications like autonomous vehicles and wearable devices.

- 5G and Beyond: High-speed, low-latency networks are facilitating the real-time integration of multimodal data streams from distributed sources, enabling new applications in areas like remote surgery and immersive virtual experiences.

The Road Ahead: 2025 and Beyond

Looking toward the future, several key trends are shaping the evolution of multimodal AI:

Agentic AI Systems

Autonomous AI agents that can operate with minimal human supervision are becoming more sophisticated. Models like OpenAI’s o1 and Anthropic’s Claude are leading this trend, demonstrating the ability to:

- Plan and Execute Complex Tasks: Breaking down objectives into manageable steps and executing them across different modalities.

- Adapt to Changing Environments: Monitoring multiple data streams to detect changes and adjust strategies accordingly.

- Collaborate with Humans and Other AI Systems: Communicating effectively through various channels to work alongside human teams and other AI agents.

These agentic systems represent a significant evolution from reactive AI models, moving toward proactive assistants that can anticipate needs and take initiative.

Enhanced Data Fusion and Cross-Modal Reasoning

Advances in neural network architectures are improving how AI systems integrate information across modalities:

- Multimodal Transformers: Extending the transformer architecture beyond text to handle multiple modalities simultaneously, enabling more sophisticated cross-modal attention mechanisms.

- Neural-Symbolic Integration: Combining neural networks’ pattern recognition capabilities with symbolic reasoning to enable more robust logical inference across modalities.

- Few-Shot and Zero-Shot Learning: Reducing the need for extensive labeled multimodal datasets by enabling models to learn from limited examples or transfer knowledge across domains.

These advances are creating AI systems with more coherent understanding and reasoning capabilities, able to draw connections between concepts across different modalities in ways that more closely resemble human cognition.
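
The zero-shot idea from the list above can be sketched as a nearest-label lookup in a shared embedding space: embed the input, embed a text description of each candidate class, and pick the class whose description is most similar. In a CLIP-style system both embeddings would come from trained encoders, with the text side typically encoding prompts such as "a photo of a cat". Here, as an assumption for illustration, hand-written three-dimensional vectors stand in for encoder outputs.

```python
import math


def cosine(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def zero_shot_classify(input_embedding, label_embeddings):
    # No labeled training examples are used: each class is defined only by its text embedding
    scores = {label: cosine(input_embedding, emb) for label, emb in label_embeddings.items()}
    best = max(scores, key=scores.get)
    return best, scores


# Toy stand-ins for text-encoder outputs of prompts such as "a photo of a cat"
label_embeddings = {
    "cat": [0.9, 0.1, 0.0],
    "dog": [0.1, 0.9, 0.0],
    "car": [0.0, 0.1, 0.9],
}
image_embedding = [0.8, 0.2, 0.1]  # toy stand-in for an image-encoder output
label, scores = zero_shot_classify(image_embedding, label_embeddings)
print(label, scores)
```

Adding a new class only requires embedding a new description, with no retraining. This is how such models reduce their dependence on labeled multimodal datasets.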

Ethical and Responsible Development

As multimodal AI becomes more powerful and pervasive, ensuring its ethical and responsible development is increasingly important:

- Fairness and Bias Mitigation: Addressing biases that may be amplified when combining multiple data sources and modalities.

- Privacy Protection: Developing techniques for privacy-preserving multimodal learning that can extract insights without compromising sensitive information.

- Transparency and Explainability: Creating methods to explain how multimodal systems arrive at their conclusions, especially for high-stakes applications in healthcare, finance, and law.

- Environmental Sustainability: Reducing the computational resources required for training and deploying multimodal models to minimize their environmental impact.

Preparing for a Multimodal Future

As multimodal AI continues to evolve, organizations and individuals can take several steps to prepare for and benefit from this transformative technology:

For Businesses

- Data Strategy: Develop strategies for collecting, integrating, and leveraging multimodal data across the organization.

- Talent Development: Invest in training and recruiting professionals with expertise in multimodal AI and its applications.

- Use Case Identification: Identify specific business challenges that could benefit from multimodal approaches, prioritizing those with the highest potential impact.

- Ethical Framework: Establish guidelines and governance structures for the responsible development and deployment of multimodal AI systems.

For Developers and Researchers

- Interdisciplinary Collaboration: Foster collaboration between experts in different domains (computer vision, NLP, audio processing) to advance multimodal research.

- Benchmark Development: Create comprehensive benchmarks that evaluate multimodal systems across diverse tasks and scenarios.

- Tool and Framework Enhancement: Develop more accessible tools and frameworks for building multimodal applications, reducing the barrier to entry.

For Society and Policymakers

- Education and Awareness: Promote understanding of multimodal AI’s capabilities, limitations, and implications among the general public.

- Regulatory Frameworks: Develop adaptive regulatory approaches that ensure safety and fairness without stifling innovation.

- Inclusive Development: Ensure that multimodal AI benefits diverse communities and addresses a wide range of societal challenges.

Conclusion

Multimodal machine learning represents a significant leap forward in artificial intelligence, moving us closer to systems that can perceive and understand the world in ways that more closely resemble human cognition. By integrating multiple types of data and learning the complex relationships between them, these systems are unlocking new capabilities and applications across industries.

As we look toward 2025 and beyond, the continued advancement of multimodal AI promises to transform how we interact with technology, how businesses operate, and how we address complex societal challenges. For businesses and developers looking to stay ahead of the curve, investing in multimodal AI capabilities now will be crucial for future competitiveness in an increasingly AI-driven world.

The journey toward truly intelligent systems that can seamlessly integrate diverse forms of information is just beginning, but the progress already made suggests a future where AI can engage with the world’s complexity in increasingly sophisticated and helpful ways.
